The Client

The client runs an online design blog that offers a unique perspective on many topics: tattoos, tattoo ideas, graphic design, wallpapers, nail designs, hairstyles, and more. They publish listicles and blog posts covering the world of design, including tutorials, articles, roundups, how-to guides, tips, tricks, and even graphic design hacks.

The Problem

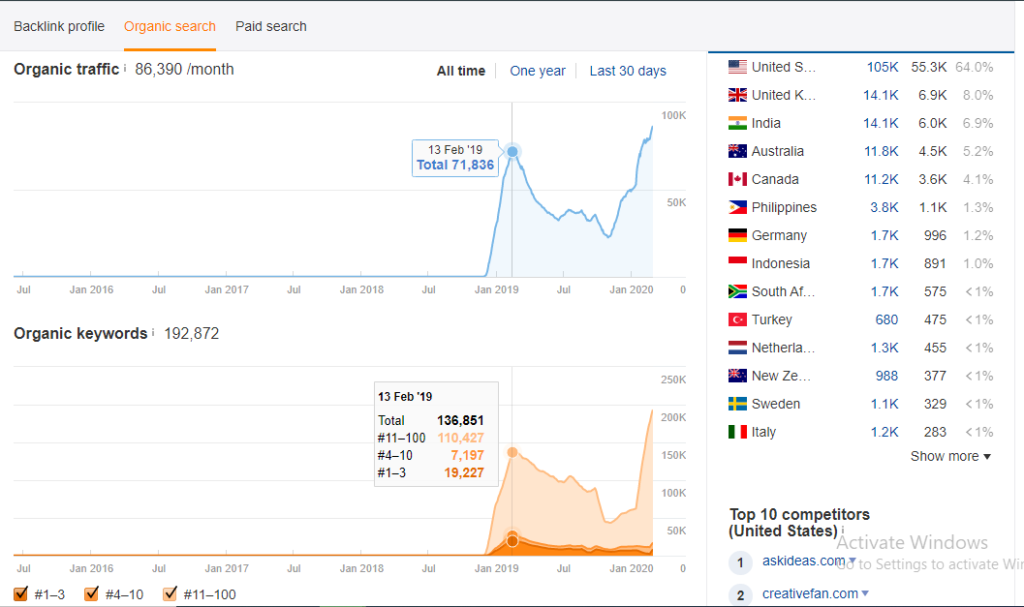

The website had over 20,000 web pages. In February 2019, it had a solid amount of viewership, averaging 71,000 views every month. As an established website, it had a large library of content catering to several niches. However, its traffic then started to plummet. The timeline below shows what happened before and after the client signed with To The Top.

Design Client Organic Traffic Timeline

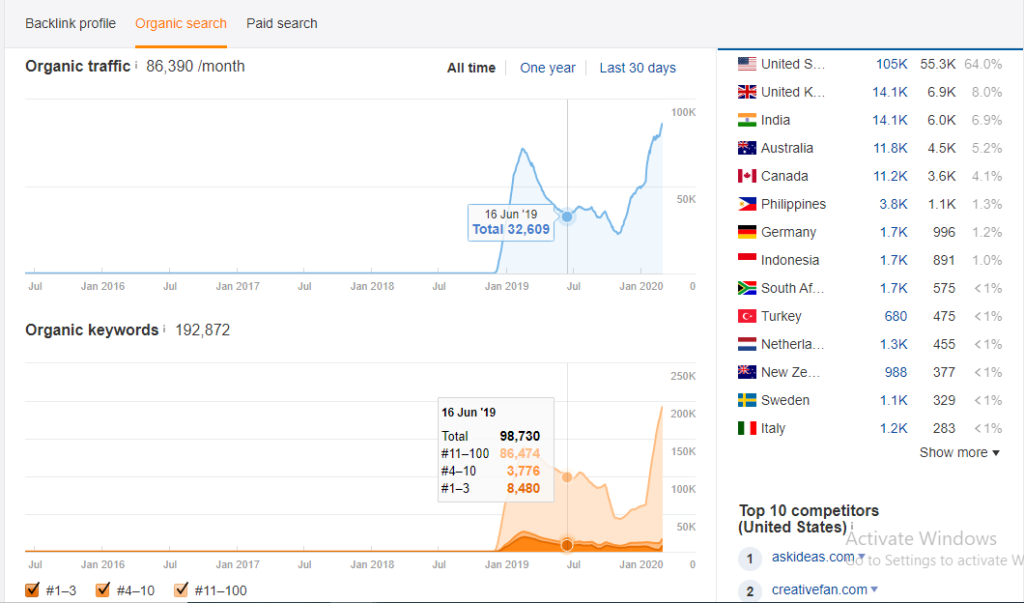

Organic Traffic June 2019

Google’s June 2019 algorithm update hit the site hard. Its viewership tanked, falling as low as 32,609 views per month. It didn’t help that competitors were putting effort into improving their on-page content at the same time.

Organic Traffic August 2019

After Google’s August 2019 algorithm update, the website’s views recovered slightly, reaching 37,886 views per month before resuming their downward trend.

Update August 2019

The client enlisted To The Top Agency to help recover their audience. We carried out an extensive Website Quality Audit, including SEO audits, to determine why the website’s rankings were falling. The strategy we employed was tailor-made from the data and insights gathered in that audit. The main goal was for the website to appear at the top of search engine results pages once again.

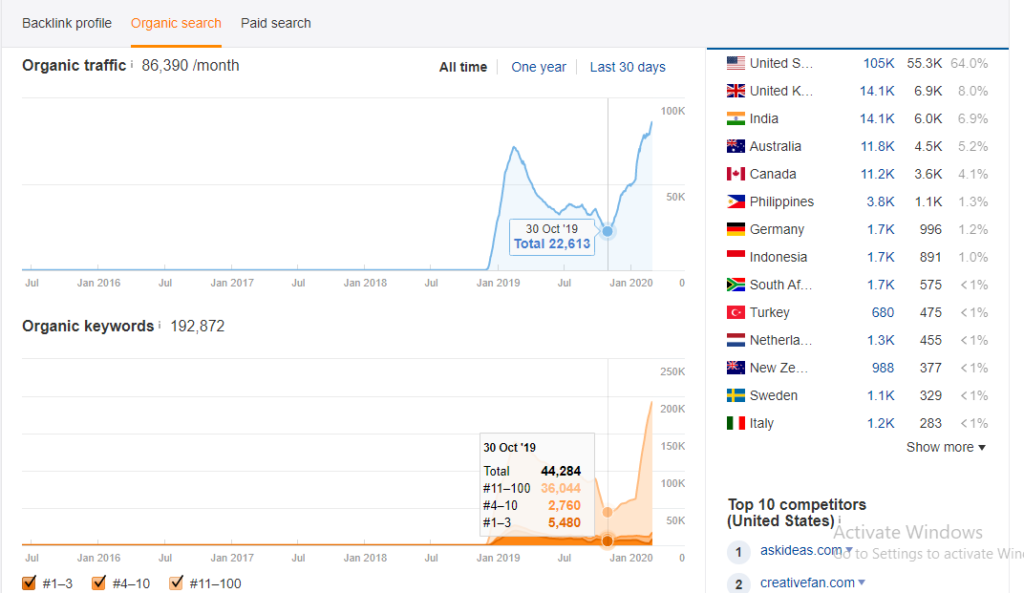

Organic Traffic October 2019

In October, while we were still in the middle of our planning phase, the client’s site hit an all-time low of 22,613 views.

Planning: November 2019 – February 2020

We then put our plans into action: on-page edits, content optimization, site speed fixes, and more. The numbers show how effective these strategies were: the site has seen a steady increase in organic traffic ever since, surpassing its original numbers with a traffic increase of over 300%.

Website Quality Audit

Phase One: Link Juice and Content Optimization

Figuring out why the site’s organic traffic plummeted was a real head-scratcher for the client. They used to be a leading figure in the design industry and on the search engine results pages. Suddenly, their audience was dwindling.

After the client signed with us, we first applied our standard SEO process: link building and content optimization. This was phase one of our SEO strategy.

Link Juice

In the SEO industry, there is a term called “link juice.” Link juice is the hypothetical value passed through hyperlinks from one page to another. Essentially, links act as upvotes from other websites, convincing Google that your page is high-quality and deserves to rank high on search engine results pages.

There are two types of links important to SEO: backlinks and internal links. We optimized both for the website.

- Backlinks

A backlink is a link from another website to one of your own pages. It is the online version of a citation in an academic journal: being cited means other websites view you as a credible source or an authority in your industry. Links between your site and other websites run in two directions:

- Inbound Link

An inbound link is a link on a different website that sends the user to your webpage. We searched the web for mentions of the client’s site that did not link to it.

We then reached out to those webmasters and asked if they could add a link to the client’s website. At the same time, our link builders fostered relationships with other relevant websites whose content would benefit from including a link to the client’s site.

- Outbound Link

An outbound link is a link that sends visitors from the client’s site to a different website. We made sure that every outbound link on the client’s pages pointed to the right site and that all of the links worked. Broken or incorrect links hamper the user experience, which hurts your website’s ranking and reputation.

- Internal Links

Internal links are links that take the user from one page of a website to another page on the same domain. We made sure that all of the internal links on the client’s website were working correctly. The goal of internal links is to keep the user on the client’s site for as long as possible. There are two ways to do this: continually pointing the audience to other pages once they’ve finished with one, or publishing long-form content.
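To make the internal/outbound distinction concrete, here is a minimal Python sketch that parses a page’s HTML and sorts its links by whether they stay on the same domain. It uses only the standard library; `classify_links` and the example URLs are hypothetical, and it treats `www.` and non-`www.` hosts as different sites. It does not check whether links actually work; a real audit would also request each URL and record its HTTP status code.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def classify_links(page_url, html):
    """Split a page's links into internal and outbound (external) links."""
    collector = LinkCollector()
    collector.feed(html)
    site_host = urlparse(page_url).netloc.lower()
    internal, outbound = [], []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links like "/tattoo-ideas"
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar links
        if parsed.netloc.lower() == site_host:
            internal.append(absolute)
        else:
            outbound.append(absolute)
    return internal, outbound


# Hypothetical example page
page_html = (
    '<a href="/tattoo-ideas">Tattoo ideas</a>'
    '<a href="https://other.example/post">Source</a>'
    '<a href="mailto:hello@example.com">Email</a>'
)
internal, outbound = classify_links("https://design-blog.example/nails", page_html)
print(internal)  # ['https://design-blog.example/tattoo-ideas']
print(outbound)  # ['https://other.example/post']
```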

Results

In an ideal world, we would report that our initial strategy worked perfectly. The client’s website ranking became the highest in the industry. Their online traffic quadrupled. Their revenue multiplied. And everyone clapped.

Unfortunately, we do not live in a utopian world where we get everything we wish for. Our initial efforts to improve the client’s rankings did not achieve the results we had hoped for. Unlike with our previous clients, where link building and content optimization had usually done the trick, the results here suggested that something deeper was going on.

Phase Two: Content Creation, Technical Audit, Improvement of User Experience

Our team decided to dig deeper into the root cause of the problem. Using Google Analytics, we analysed our client’s rankings in comparison to their competition to determine what was causing their traffic loss. Were there pages whose rankings did not tank? What was the difference between those pages and the pages that lost traffic?

We ran our client’s website through different SEO tools to measure rankings and user signal metrics such as click-through rate, average time on site, and average pages per session.

To The Top has a unique approach to SEO in that all strategies employed are data-driven. The SEO industry is filled with baseless rumours and age-old strategies that have long been phased out.

We have expert data analysts on our team who turn the data from SEO tools (e.g. Ahrefs and Google Analytics) into insights we can act on. Three factors remained to be checked: poor content, technical errors, and a hampered user experience.

Content

One of Google’s crucial ranking factors is a website’s content. Gone are the days when spammy websites with poor content ruled the top of the search engine results pages simply because they had 100 keywords stuffed into them. With the updates Google has been rolling out, the algorithm can now tell good content from bad. Aside from grammar, Google now also checks for relevancy, content length, and the depth of information shared. Our team decided on three ways to improve the client’s content.

- Lack Of Relevancy

We analysed the client’s website and the search engine results pages. Our writers manually inspected the client’s pages and found a mismatch between users’ search intent and the pages that were ranking. We made extensive efforts to ensure that all the content on the client’s pages was relevant to the user’s search intent.

Google’s main priority is to give its users the most relevant and useful content. Websites can be penalized when Google’s algorithm finds that their content is not relevant to the keywords they rank for.

- Poor Writing and Thin Content

Aside from the lack of relevancy, the client’s website also had poor content. The webpages were filled with grammatical errors, typos, and text that could be described more as word vomit than coherent sentences. To fix this, we put a lot of effort into making sure that every webpage had well-written content.

Another factor we had to check was thin content: content that adds little to no value to the website. Pages with duplicate content, very little text, or scraped content all fall under this category. We used Siteliner to scan the client’s website for duplicate content and broken links, and even to check internal page rank.

- Under-Optimized Content

Content optimization was part of phase one of our strategy; in phase two, we refined those efforts. Each page was given a specific keyword to rank for. We also found that many pages were missing their h1, h2, and h3 headings.
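Siteliner did the actual scanning in this project. As a rough illustration of how thin and near-duplicate pages can be flagged, the Python sketch below counts the words on each page and compares pages using word shingles and Jaccard similarity. This is a generic, swapped-in technique rather than Siteliner’s own method, and `audit_pages`, `min_words`, and `dup_threshold` are hypothetical names and illustrative values, not Google rules. Comparing every pair is quadratic, so on a site of 20,000 pages an approximation such as MinHash would be more practical.

```python
import re


def words_of(text):
    """Lowercase word tokens of a page's body text."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text, k=5):
    """Set of k-word shingles (overlapping word sequences) in the text."""
    words = words_of(text)
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity |A & B| / |A | B| of two sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def audit_pages(pages, min_words=300, dup_threshold=0.8):
    """Flag thin pages and near-duplicate page pairs.

    pages: dict mapping URL -> page body text (hypothetical input).
    min_words and dup_threshold are assumed cut-offs for illustration.
    """
    thin = [url for url, text in pages.items() if len(words_of(text)) < min_words]
    sets = {url: shingles(text) for url, text in pages.items()}
    urls = sorted(pages)
    duplicates = []
    for i, u in enumerate(urls):
        for v in urls[i + 1:]:
            score = jaccard(sets[u], sets[v])
            if score >= dup_threshold:
                duplicates.append((u, v, round(score, 2)))
    return thin, duplicates


pages = {
    "/a": "the quick brown fox jumps over the lazy dog again and again",
    "/b": "the quick brown fox jumps over the lazy dog again and again",
    "/c": "short page",
}
print(audit_pages(pages, min_words=5))  # (['/c'], [('/a', '/b', 1.0)])
```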

Technical Audit

Google uses hundreds of ranking factors to determine the order of results on its search engine results pages. One of the biggest factors affecting a website’s ranking is its technical performance.

Running a comprehensive website quality audit on all of the website’s 20,000 web pages confirmed our theory: a major reason the site’s organic traffic tanked was the presence of technical errors.

Below are the most important actions from the Website Quality Audit that helped the website recover its organic traffic and climb the rankings.

Technical Audit

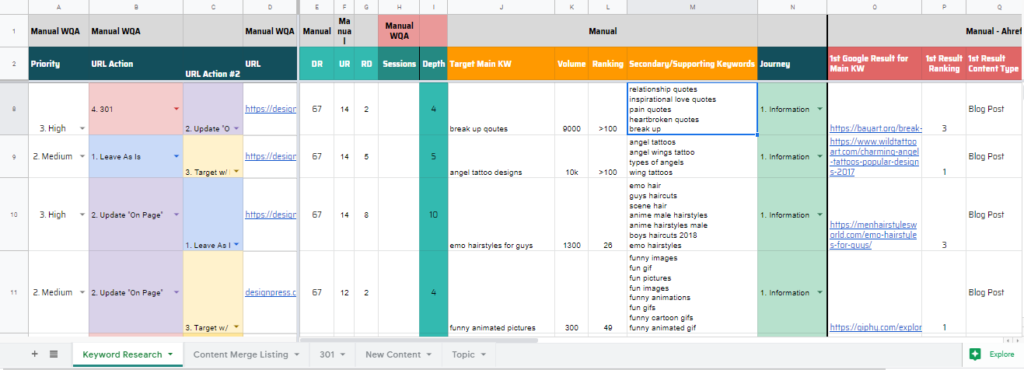

We created a document to track all of the changes needed on the site’s pages (see the screenshot above). These pages had to be fixed immediately because the issues affected the experience of the site’s visitors. Technical issues like these can greatly increase a website’s bounce rate, which weighs heavily with Google. You want to keep visitors on your site for as long as possible.

We created a set of guidelines to decide which action to take for each page based on its condition and organic traffic. Every page was assigned a specific action:

- If the page receives organic traffic and its content is properly optimized, we leave it as is. No use fixing what isn’t broken.

- If the page receives poor organic traffic, we review it manually. Depending on whether it or a related page has better potential as the primary page, it is either redirected to that page or has other pages redirected to it.

- If the page receives no traffic but has backlinks, we 301-redirect it to the most relevant page. This preserves its link juice, which is an important resource for any website.

- If the page receives no traffic and has no links but has solid, well-written content, we merge that content into a more relevant page.

- If the page receives no traffic, has no links, and has little or no useful content, we keep it out of Google’s index with a noindex directive.

- Lastly, if the page returns a 404 error, we redirect it to the most relevant page available on the website.
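The guidelines above form a small decision procedure. A minimal Python sketch of it follows, assuming a hypothetical per-page record (`PageAudit`) and an assumed cut-off of 50 monthly visits for “poor” traffic; neither comes from the audit itself. The final “optimize content” branch is also an assumption, because the guidelines do not say what happens to a page with healthy traffic that is not yet optimized.

```python
from dataclasses import dataclass


@dataclass
class PageAudit:
    """Hypothetical per-page audit record; field names are illustrative."""
    url: str
    monthly_organic_visits: int
    backlinks: int
    content_quality: str  # "good", "poor" or "none"
    optimized: bool
    status_code: int = 200


def choose_action(page: PageAudit, low_traffic: int = 50) -> str:
    """Map a page to one of the audit actions; low_traffic is an assumed threshold."""
    if page.status_code == 404:
        return "redirect to most relevant page"
    if page.monthly_organic_visits == 0:
        if page.backlinks > 0:
            return "301 redirect to most relevant page"  # keep the link juice
        if page.content_quality == "good":
            return "merge content into a more relevant page"
        return "noindex"
    if page.monthly_organic_visits < low_traffic:
        return "manual review"  # redirect it, or consolidate other pages into it
    if page.optimized:
        return "keep as is"
    return "optimize content"  # assumption: case not covered by the guidelines


print(choose_action(PageAudit("/old-wallpapers", 0, 4, "poor", False)))
# 301 redirect to most relevant page
```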

On-Page Content Optimization (Headings, h1, h2, h3 to h6)

The next thing we found lacking on the website was its on-page content. Because the site is mostly about images and graphics, its pages had little text, and therefore barely any keywords Google could use to rank them.

We did an extensive keyword analysis to find the relevant keywords for each page. Our content writers either inserted keywords into the pages or created content that was both relevant and high-quality. The headings, subheadings (h2 to h6), meta descriptions, and URLs were also optimized to improve rankings.
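A heading check like the one described here can be partly automated. The Python sketch below, using only the standard library, collects a page’s h1 to h6 headings and reports a few common problems. The specific checks (exactly one h1, at least one h2, target keyword present in some heading) are widely used on-page conventions assumed for illustration, not documented Google requirements, and `audit_headings` is a hypothetical helper.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCollector(HTMLParser):
    """Records (tag, text) for every h1-h6 heading, in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None  # heading tag currently open, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._current, self._buffer = tag, []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            self.headings.append((tag, "".join(self._buffer).strip()))
            self._current = None


def audit_headings(html, keyword):
    """Return a list of heading problems for one page (illustrative checks only)."""
    parser = HeadingCollector()
    parser.feed(html)
    tags = [tag for tag, _ in parser.headings]
    problems = []
    if tags.count("h1") != 1:
        problems.append(f"expected exactly one h1, found {tags.count('h1')}")
    if "h2" not in tags:
        problems.append("no h2 subheadings")
    if not any(keyword.lower() in text.lower() for _, text in parser.headings):
        problems.append(f"target keyword {keyword!r} not in any heading")
    return problems


print(audit_headings("<h1>Nail Designs</h1><h2>Summer Nail Designs</h2>", "nail designs"))
# []
```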

The site had another recurring issue that needed fixing: duplicate content, which affected several pages. Duplicate content can hurt search engine rankings because Google finds it difficult to choose which version is most relevant to a user’s search query. A closely related problem is keyword cannibalization, where two of a website’s pages compete for the same keyword.

To avoid keyword cannibalization, we created keyword clusters, which allowed us to separate pages that were competing against each other.
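The first step in spotting cannibalization is simply finding keywords that more than one page targets. The Python sketch below does this by grouping pages on a normalized target keyword. It assumes a hypothetical URL-to-keyword mapping (for example, exported from a keyword-mapping spreadsheet) and uses exact matching, which is a much simpler stand-in for real keyword clustering; clustering tools typically also group keywords by search-result overlap or meaning.

```python
from collections import defaultdict


def find_cannibalization(page_keywords):
    """Return {keyword: [urls]} for every keyword targeted by two or more pages.

    page_keywords: dict mapping URL -> primary target keyword (hypothetical input).
    """
    clusters = defaultdict(list)
    for url, keyword in page_keywords.items():
        # Normalize case and whitespace so "Nail Art" and "nail  art" collide.
        clusters[" ".join(keyword.lower().split())].append(url)
    return {kw: sorted(urls) for kw, urls in clusters.items() if len(urls) > 1}


mapping = {
    "/nail-art-ideas": "Nail Art",
    "/best-nail-art": "nail  art",
    "/tattoo-ideas": "tattoo ideas",
}
print(find_cannibalization(mapping))
# {'nail art': ['/best-nail-art', '/nail-art-ideas']}
```

Each flagged group is then a candidate for merging, redirecting, or re-targeting one of the pages at a different keyword.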

Site Speed

Slow site speed is one factor that truly hurts the user experience and, in turn, raises the bounce rate. Because each page carries so many images, load times were long and needed to be brought down. We lowered the resolution and reduced the file size of the images so that pages would be lighter to load. This sped up the website considerably.

Mobile-Responsiveness

As we live in the age of mobile phones, it is crucial that every page of the site be responsive for users visiting on their phones. However, the website was not optimized for mobile users: the mobile version was as heavy as the desktop version, which resulted in long loading times.

To fix this and improve the website’s performance, we removed a few elements from the mobile version, prioritizing mobile users over the desktop layout. For example, the pages were made to fit the smaller screens of mobile phones. We also compressed images and lowered their resolution so that mobile users could view the website without long waits.

Website Structure

One thing we noticed is that the client’s website was hard to navigate, and most of the poorly performing pages had a very high crawl depth (it took many clicks to reach them). The structure was convoluted, and getting from one page to another was too complicated. To fix this, we changed the entire website structure: we added sidebars and footers, corrected the pagination, and created a related articles section. This made it easier for users to navigate and view more pages. It also meant that Googlebot could reach the pages in fewer clicks, assigning them a higher importance value.
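Crawl depth is the minimum number of clicks needed to reach a page from the home page, which is exactly what a breadth-first search over the internal link graph computes. The Python sketch below assumes a hypothetical link map (each URL mapped to the internal URLs it links to) and shows how adding one navigation link, such as a sidebar entry, shortens a deep page’s path.

```python
from collections import deque


def crawl_depths(links, home="/"):
    """Breadth-first search from the home page.

    links: dict mapping each URL to the internal URLs it links to (hypothetical data).
    Returns {url: minimum clicks from home} for every reachable page.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first time BFS reaches a page = shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


before = {
    "/": ["/category"],
    "/category": ["/page-2"],
    "/page-2": ["/page-3"],
    "/page-3": ["/old-post"],
}
after = dict(before)
after["/"] = ["/category", "/old-post"]  # e.g. a new sidebar link on the home page

print(crawl_depths(before)["/old-post"])  # 4
print(crawl_depths(after)["/old-post"])   # 1
```

Pages missing from the result are unreachable through internal links (orphan pages), which is a useful by-product of the same search.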

Technical Aspects

We worked through a 240-point technical SEO checklist. Some of the items included:

- Google Search Console (GSC)

Google Search Console is a free service from Google that lets webmasters submit their webpages for indexing. Every website should be registered with Google Search Console so that it can see any issues GSC has picked up, such as indexation errors, crawl rate problems, and mobile usability issues.

- Google Analytics

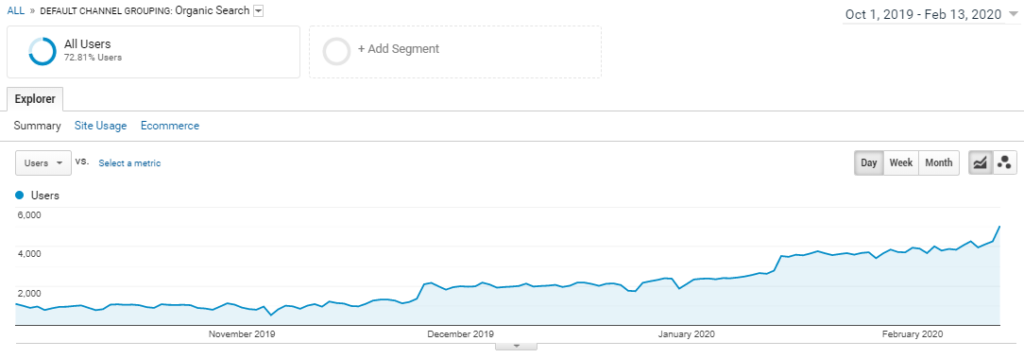

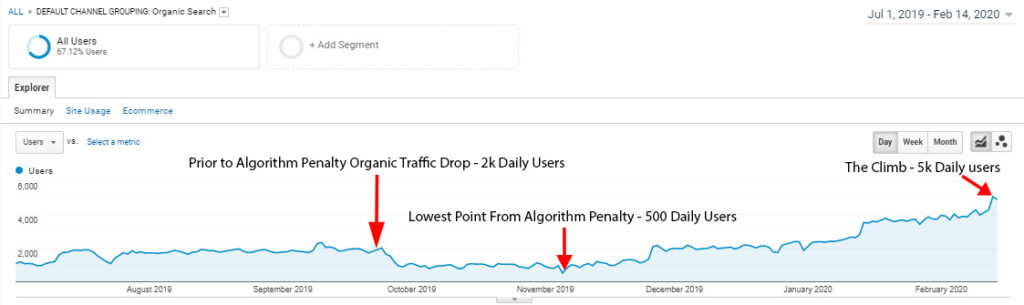

*The screenshot summarizes the client site’s organic traffic in Google Analytics.*

We carefully monitored organic traffic in Google Analytics to identify where the traffic losses came from and which pages were affected, and analysed the data to find possible causes. In most cases, the pages that lost organic traffic also had below-average user signal metrics.
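The cross-check described here, pages that both lost traffic and show weak user signals, can be sketched in a few lines of Python. The input format is hypothetical (for example, a per-page export with sessions before and after the drop), and “below average” is assumed to mean below the mean of the pages being compared on both average time on page and pages per session.

```python
from statistics import mean


def flag_pages(pages):
    """Return URLs that lost organic traffic AND have below-average user signals.

    pages: list of dicts with hypothetical keys 'url', 'sessions_before',
    'sessions_after', 'avg_time_on_page' (seconds) and 'pages_per_session'.
    """
    avg_time = mean(p["avg_time_on_page"] for p in pages)
    avg_pps = mean(p["pages_per_session"] for p in pages)
    flagged = []
    for p in pages:
        lost_traffic = p["sessions_after"] < p["sessions_before"]
        weak_signals = (p["avg_time_on_page"] < avg_time
                        and p["pages_per_session"] < avg_pps)
        if lost_traffic and weak_signals:
            flagged.append(p["url"])
    return flagged


sample = [
    {"url": "/a", "sessions_before": 1000, "sessions_after": 400,
     "avg_time_on_page": 20, "pages_per_session": 1.1},
    {"url": "/b", "sessions_before": 800, "sessions_after": 900,
     "avg_time_on_page": 120, "pages_per_session": 2.5},
    {"url": "/c", "sessions_before": 500, "sessions_after": 450,
     "avg_time_on_page": 100, "pages_per_session": 2.0},
]
print(flag_pages(sample))  # ['/a']
```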

Results

Below are the results that we delivered for the client. Data is derived from Google Analytics.

Online Traffic

The site’s online traffic began declining in June 2019 and reached an all-time low of 22,613 views/month in October 2019. In less than a year, the site recovered its lost traffic and then surpassed its pre-decline numbers. Today, it receives almost 120,000 views a month.
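For reference, the percentage change between two monthly traffic figures is worked out below, using the numbers reported in this case study and treating “almost 120,000” as 120,000 (so both results are slight upper bounds):

$$
\Delta\% = \frac{V_{\text{new}} - V_{\text{old}}}{V_{\text{old}}} \times 100\%
$$

$$
\text{From the October 2019 low: } \frac{120{,}000 - 22{,}613}{22{,}613} \times 100\% \approx 430.7\%
$$

$$
\text{From the February 2019 average: } \frac{120{,}000 - 71{,}000}{71{,}000} \times 100\% \approx 69.0\%
$$

The “over 300%” figure is therefore consistent with measuring growth from the October 2019 low rather than from the pre-decline baseline.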